Deep Dive into the Transformer Model: 2 Powerful Engines Behind Advanced Language Models

The advent of the transformer model has been nothing short of revolutionary for natural language processing (NLP). Before transformers, Recurrent Neural Networks (RNNs) were the standard-bearer for handling sequential data. Transformers have since substantially outperformed RNNs on many NLP tasks and triggered a renaissance in the generative capabilities of language models.

The Power of Contextual Understanding

The true strength of the transformer architecture lies in its self-attention mechanism, which lets the model process the entire input sequence at once rather than token by token. The result is a powerful ability to grasp the nuances of language: the model determines the relevance of each word to every other word in a sentence, irrespective of their positions.

The self-attention mechanism assigns attention weights across the input, enabling the model to discern who is doing what to whom and whether a particular detail is pertinent to the broader context. These weights are computed from parameters learned during training, and they can be visualized as attention maps, which depict the strength of relationships among the words in a sentence. For instance, in the sentence “The teacher gave the student a book,” an attention map might show strong connections between “book” and “teacher” and between “book” and “student,” reflecting the roles these entities play with respect to the book.
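To make attention weights concrete, here is a minimal pure-Python sketch of scaled dot-product self-attention, the formulation used in the original transformer paper: each token’s query is compared with every token’s key, the scores are divided by the square root of the key dimension and normalized with a softmax, and the resulting weights average the value vectors. For simplicity it assumes the queries, keys, and values are the raw token vectors; a real model first multiplies the embeddings by learned projection matrices. The three 2-dimensional vectors are made-up stand-ins for token embeddings.

```python
import math


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each argument is a list of equal-length vectors, one per token.
    Returns (outputs, weights); weights[i][j] is how strongly token i
    attends to token j.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        row = softmax(scores)
        weights.append(row)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[dim] for w, v in zip(row, values))
                        for dim in range(len(values[0]))])
    return outputs, weights


# Hypothetical 2-dimensional "embeddings" for a three-token input.
# Assumption: Q = K = V = x (no learned projection matrices).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = self_attention(x, x, x)
for row in attn:
    print([round(a, 2) for a in row])
```

Each row of `attn` corresponds to one row of an attention map: it sums to 1 and shows how much one token draws on each of the others.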

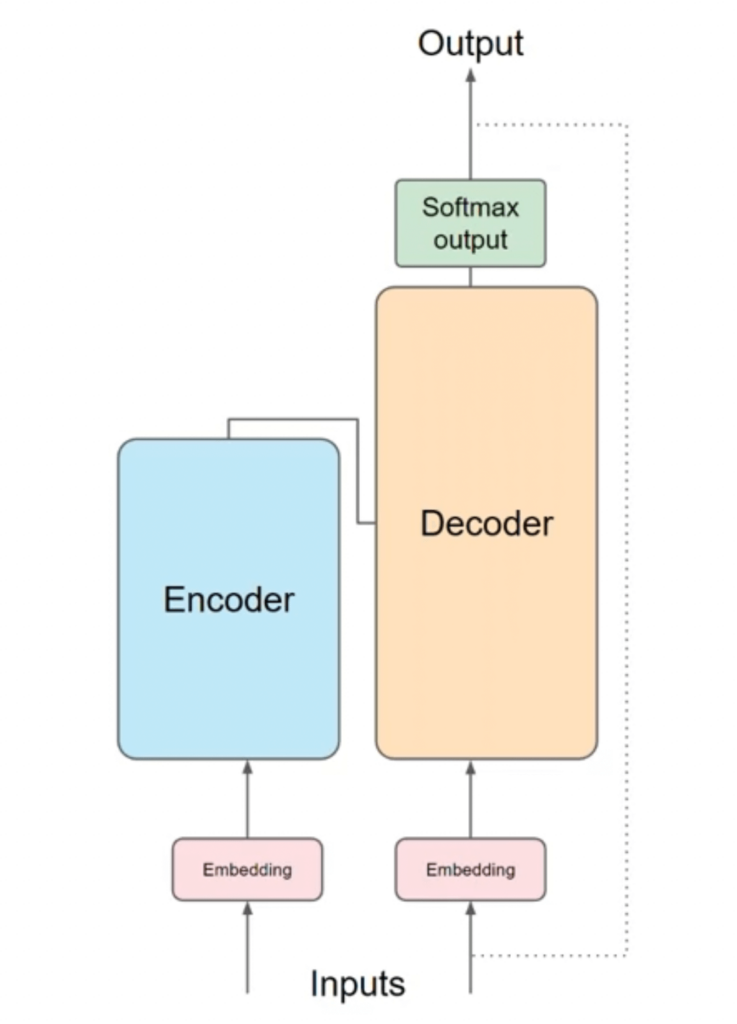

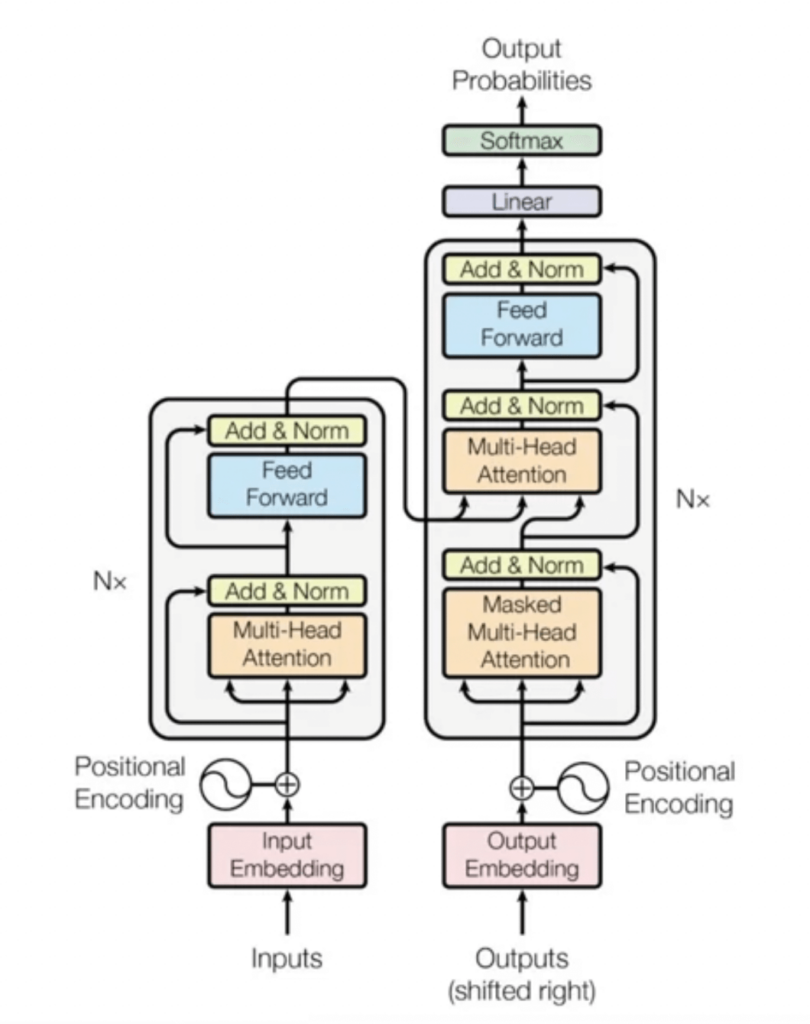

Transformer Architecture: Encoder and Decoder

The transformer model is divided into two primary components: the encoder and the decoder. Each is a stack of multiple layers, and the two stacks share a similar structure while serving different functions.

Encoder

The encoder’s job is to process the input sequence and map it into an abstract continuous representation that holds both semantic and syntactic information about the input. It does so through a series of steps:

- Tokenization: First, the input text is broken down into tokens. Tokenization can operate at the word level or the subword level; subword tokenization gives a more granular representation that handles a variety of languages and vocabularies effectively.

- Embeddings: Each token is then converted into a numerical form through embeddings, which are high-dimensional vectors that encode the meaning of tokens. The embeddings are learned during training and can capture the semantic relationships between words.

- Positional Encodings: Because the model processes all tokens in parallel, self-attention by itself has no notion of word order. Positional encodings are added to the embeddings to give the model information about each token’s position within the sequence.

- Self-Attention: The encoder then uses self-attention mechanisms to weigh the influence of different tokens within the sequence. This is done multiple times in parallel through what is known as multi-head attention, allowing the model to capture various aspects of language.

- Feed-Forward Networks: Finally, the output of the attention step is passed through a position-wise feed-forward network, applied to each token independently, to refine the representation further.
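The positional-encoding step can be sketched with the fixed sinusoidal scheme from the original transformer paper, in which even dimensions use a sine and odd dimensions a cosine of the position, at geometrically spaced frequencies. This is one possible choice rather than the only one (many models learn their positional embeddings instead), and the 4-dimensional embeddings below are hypothetical values chosen for illustration.

```python
import math


def positional_encoding(seq_len, d_model):
    """Sinusoidal encodings: PE(pos, 2k) = sin(pos / 10000^(2k/d_model)),
    PE(pos, 2k+1) = cos(pos / 10000^(2k/d_model))."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the same frequency.
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


def add_positions(embeddings):
    """Add positional encodings element-wise to a list of token embeddings."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, pos_vec)]
            for emb, pos_vec in zip(embeddings, pe)]


# Hypothetical embeddings: the same token repeated three times.
token_embeddings = [[0.1, 0.2, 0.3, 0.4] for _ in range(3)]
encoded = add_positions(token_embeddings)
```

Because the three input embeddings are identical, the only thing distinguishing the rows of `encoded` is the added position signal, which is exactly the order information self-attention would otherwise lack.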

Decoder

The decoder, on the other hand, generates the output sequence from the encodings passed to it. It follows a similar process to the encoder, but its self-attention is masked, which ensures that the prediction for a position can depend only on the known outputs at earlier positions.
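A common way to implement this masking is to set the attention scores for all later positions to negative infinity before the softmax, so those positions receive zero weight. The sketch below assumes a precomputed square matrix of raw scores (the values are hypothetical) and shows only the masking and normalization.

```python
import math


def causal_attention_weights(scores):
    """Apply a causal (look-ahead) mask to a square score matrix, then softmax.

    scores[i][j] is the raw attention score of position i for position j.
    Positions j > i (the future) are set to -inf, so after the softmax
    they receive exactly zero weight.
    """
    weights = []
    for i, row in enumerate(scores):
        masked = [s if j <= i else -math.inf for j, s in enumerate(row)]
        m = max(masked)  # finite, because position i itself is never masked
        exps = [math.exp(s - m) for s in masked]  # exp(-inf) == 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Hypothetical raw scores for a three-token target sequence.
scores = [[0.5, 2.0, 1.0],
          [0.3, 0.8, 3.0],
          [1.0, 1.0, 1.0]]
w = causal_attention_weights(scores)
```

Row 0 can attend only to itself, so all of its weight lands on position 0; row 1 puts zero weight on position 2 even though that score is the largest in its row.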

- Masked Self-Attention: This step prevents the model from ‘peeking’ at later tokens of the correct output when predicting the current one.

- Cross-Attention: The decoder also includes cross-attention layers that attend to the encoder’s output, combining information from both the input sequence and the partially generated output sequence.

- Output Generation: Finally, a linear layer projects the decoder’s output to logits (one score per vocabulary token), and a softmax function turns these into probabilities from which the next token is predicted.
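The output-generation step can be sketched as follows, assuming a hypothetical four-word vocabulary and made-up logits for a single decoding step. It uses greedy decoding, picking the highest-probability token; this is only one strategy, and generative models often sample from the distribution instead (for example with temperature or top-k sampling).

```python
import math


def softmax(logits):
    # Subtract the max logit for numerical stability; the result sums to 1.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_next_token(logits, vocab):
    """Greedy decoding: convert logits to probabilities, pick the most likely token."""
    probs = softmax(logits)
    best = max(range(len(vocab)), key=lambda i: probs[i])
    return vocab[best], probs


# Hypothetical vocabulary and decoder logits for one generation step.
vocab = ["the", "student", "book", "<eos>"]
logits = [1.2, 0.3, 3.1, -0.5]
token, probs = predict_next_token(logits, vocab)
```

Here `token` is `"book"`, the entry with the largest logit: the softmax preserves the ranking of the logits while turning them into probabilities that sum to 1.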

Implications for Large Language Models and Generative AI

Because the transformer can effectively encode and generate language, it has become the foundation for Large Language Models (LLMs) such as GPT-3 and its successors, which use only the decoder stack. These models can generate coherent text, translate between languages, summarize content, answer questions, and even write code.

In the generative AI sphere, transformers have enabled the creation of content with a level of fluency and coherence that was previously unattainable. They have found applications in creating artistic compositions, designing novel protein sequences, and even generating synthetic data for training other AI models.

Conclusion

Transformers have fundamentally altered how machines understand and generate human language. With their ability to learn the intricate patterns of language and generate text that resonates with human readers, transformers have set a new standard in AI. As we continue to refine and expand upon transformer models, their range of applications keeps widening, promising even more sophisticated AI tools and systems in the future.