5 Steps to Customized AIGC Applications: Launch Your LLM-Powered App

Want to build your own customized AIGC application? Here is what you need to know.

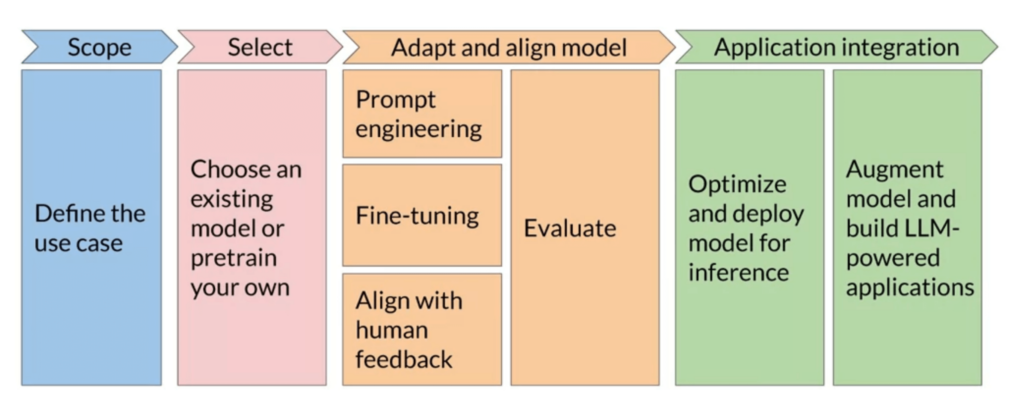

Launching an app powered by a large language model (LLM) takes both technical expertise and strategic planning. We've distilled the lifecycle of an AIGC (AI-generated content) project into a clear, step-by-step guide that takes you from the initial idea to a fully functioning application.

Step 1: Sharpen Your Focus

The success of your AI application begins with a crystal-clear understanding of what you want it to achieve. Start by zeroing in on the specific tasks you want your LLM to handle. Whether it’s crafting long-form content or pinpointing named entities in a dataset, the scope of your project should be defined as narrowly as possible. This precision will not only streamline development but also save you significant time and computational resources.

Step 2: Choose Your Starting Point

With your goals set, it's time to pick the right model for the job. Training a bespoke model is tempting, but for most developers the practical approach is to adapt an existing pretrained model. This saves considerable data, compute, and time, and gives your app a solid foundation. Train a new model from scratch only if your application demands capabilities that no existing model can be adapted to provide.

Step 3: Tailor Your AI

Even the most capable LLMs need guidance to perform specific tasks well. This is where prompt engineering comes in: crafting inputs, such as clear instructions and a few worked examples, that steer the model toward the outputs you want. If prompting alone falls short, you can fine-tune the model with supervised learning, updating its parameters on labeled examples of your task. To align the model even more closely with human preferences, reinforcement learning from human feedback (RLHF) is an advanced technique that uses people's judgments of the model's responses to further adjust its behavior, so that its outputs better match what users expect.
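As a rough illustration of prompt engineering, the Python sketch below assembles a few-shot prompt for the named-entity task mentioned in Step 1: an instruction, a couple of worked examples, and the new input. The function name `build_few_shot_prompt`, the example sentences, and the `LABEL: text; LABEL: text` output format are hypothetical choices for illustration; the resulting string would be sent to whichever model you picked in Step 2.

```python
def build_few_shot_prompt(instruction: str,
                          examples: list[tuple[str, str]],
                          query: str) -> str:
    """Combine an instruction, worked examples, and a new input into one prompt."""
    parts = [instruction.strip(), ""]
    for text, entities in examples:
        # Each worked example shows the model the exact output format we expect.
        parts.append(f"Text: {text}")
        parts.append(f"Entities: {entities}")
        parts.append("")
    # End with the new input and an open "Entities:" slot for the model to complete.
    parts.append(f"Text: {query}")
    parts.append("Entities:")
    return "\n".join(parts)


# Hypothetical worked examples for a PERSON/LOCATION extraction task.
EXAMPLES = [
    ("Ada Lovelace corresponded with Charles Babbage in London.",
     "PERSON: Ada Lovelace; PERSON: Charles Babbage; LOCATION: London"),
    ("Marie Curie was born in Warsaw.",
     "PERSON: Marie Curie; LOCATION: Warsaw"),
]

prompt = build_few_shot_prompt(
    "Extract the named entities from the text. Label each one PERSON or LOCATION.",
    EXAMPLES,
    "Alan Turing was born in London.",
)
print(prompt)
```

Showing the expected output format in the examples, not only in the instruction, tends to make the model's answers easier to parse downstream.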

Step 4: Integrate and Optimize for Deployment

Once your model performs up to standard, the next phase is deployment: integrating the LLM into your application and optimizing it for the environment it will run in. Common techniques include shrinking the model through quantization or distillation, batching requests, and caching responses to repeated queries. Optimization is key to giving users a fast, seamless experience while making efficient use of your computational resources.

Step 5: Enhance and Overcome Limitations

No AI is perfect. LLMs are known to slip, for example by inventing information (often called hallucination) or by struggling with complex reasoning. Before launch, put strategies in place to mitigate these weaknesses, such as grounding answers in retrieved source documents, checking outputs for consistency, and routing uncertain cases to a human. This final step helps your application perform reliably and keep the trust of its users.
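One way to catch unreliable answers is self-consistency: ask the model the same question several times with sampling enabled and keep the answer most samples agree on. The sketch below adds an abstention threshold so low-agreement answers can be routed elsewhere. The `sample` callable stands in for a hypothetical sampled model call, and the lowercase/whitespace normalization assumes short answers; free-form outputs would need a task-specific way to extract the final answer before voting.

```python
from collections import Counter
from typing import Callable, Optional, Tuple


def self_consistent_answer(sample: Callable[[], str],
                           n: int = 5,
                           min_agreement: float = 0.6) -> Tuple[Optional[str], float]:
    """Draw n sampled answers and majority-vote over them.

    Returns (answer, agreement). The answer is None when agreement falls
    below min_agreement, signalling the caller to abstain, escalate to a
    human, or try another strategy.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    # Light normalization so "Paris" and "paris " count as the same answer.
    answers = [sample().strip().lower() for _ in range(n)]
    answer, votes = Counter(answers).most_common(1)[0]
    agreement = votes / n
    return (answer if agreement >= min_agreement else None), agreement


# Hypothetical sampled outputs standing in for repeated model calls.
fake_samples = iter(["Paris", "paris", "Lyon", "Paris ", "Paris"])
print(self_consistent_answer(lambda: next(fake_samples)))  # ('paris', 0.8)
```

Agreement is only a proxy: a model can be consistently wrong, so a check like this complements grounding and human review rather than replacing them.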

Embrace the Iterative Nature

Remember, developing an AI application is not a linear process. You'll likely revisit earlier steps, refining your prompts, adjusting your fine-tuning, and re-evaluating performance until the application meets the target you set.

Keep Evaluating

Through it all, evaluation is your compass. Metrics and benchmarks will help you track how well your model is performing and whether it’s truly meeting your goals. Regular assessment not only steers the development process but also ensures the model remains on course after deployment.
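For a concrete metric, the sketch below computes micro-averaged precision, recall, and F1 for entity extraction, counting a prediction as correct only if its `(label, text)` pair exactly matches the reference. The `LABEL: text; LABEL: text` output format, the function names, and the small test set are assumptions for illustration; other tasks call for other metrics.

```python
from typing import Dict, Iterable, Set, Tuple

Entity = Tuple[str, str]  # (label, entity text)


def parse_entities(output: str) -> Set[Entity]:
    """Parse an assumed 'LABEL: text; LABEL: text' format into a set of entities."""
    entities: Set[Entity] = set()
    for chunk in output.split(";"):
        if ":" in chunk:
            label, text = chunk.split(":", 1)
            entities.add((label.strip().upper(), text.strip()))
    return entities


def evaluate(pairs: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Micro-averaged precision/recall/F1 over (prediction, reference) string pairs."""
    true_pos = n_pred = n_gold = 0
    for predicted, reference in pairs:
        pred, gold = parse_entities(predicted), parse_entities(reference)
        true_pos += len(pred & gold)  # exact (label, text) matches only
        n_pred += len(pred)
        n_gold += len(gold)
    precision = true_pos / n_pred if n_pred else 0.0
    recall = true_pos / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical model outputs paired with reference annotations.
test_set = [
    ("PERSON: Marie Curie; LOCATION: Paris", "PERSON: Marie Curie; LOCATION: Warsaw"),
    ("PERSON: Alan Turing", "PERSON: Alan Turing"),
]
print(evaluate(test_set))
```

Running the same fixed test set before and after every change, and periodically after deployment, turns these numbers into the ongoing assessment described above.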

Setting Sail

Armed with this roadmap, you’re now ready to embark on the exciting journey of bringing your LLM-powered application to life. While the path may be complex, breaking it down into these manageable stages will help you navigate the process with confidence and clarity, leading to a successful launch and a valuable tool for your users.